smallwheels

Well-known member

I've recently spent some time getting rid of unwanted traffic on my forum and thought the lessons learned might be of value to someone else, so I am writing them up. This is not intended as a tutorial or even as advice - it is just a couple of findings that you may or may not find useful. Also, many roads lead to Rome, depending on your situation, needs and abilities. So take it with a grain of salt.

Important constraints for my actions: My forum is pretty small (currently ~2,000 registered users), runs on shared hosting (which limits my options in terms of configuration), I do not use Cloudflare (and do not want to) and 99.8% of my user base comes from German-speaking countries in Central Europe.

So first: What do I consider "unwanted traffic"? Probably the same as most of us: spammers, bot registrations, (automated) content scrapers, cracking attempts, but also bot traffic from SEO companies, AI-training bots and most other bots that are neither a search engine nor serve another legitimate fair-use purpose.

In fact, wanting to exclude AI training bots from the forum is where it started. I installed the excellent "Known Bots" add-on by @Sim a long time ago (all add-ons I used are linked at the bottom of this post). It identifies all kinds of bots by the user agent they submit and provides a list of the latest 100 bots that visited your forum. This way you get (with some manual work) a good overview of what's floating around. For many of them a helpful link is provided that explains which purpose the bot serves - it takes manual clicking around and reading, so there's time involved, but it is a good start.

So what I did (and still do) is go through the list about once a week, identify the bots I consider unwanted and add them to the "deny" list in my robots.txt. This way I got rid of a good bunch of unwanted bots - but this approach is not enough:

First, it fully depends on the bot being cooperative. If the bot does not respect the robots.txt, the approach fails - and it turned out that while many bots do respect the robots.txt, a relevant share does not, namely the nastier SEO bots but also some bots like "facebook external hit".

Second, there are some bots where you can't tell what they do, as they are built on generic libraries and submit those as the user agent. These show up as e.g. "okhttp", "Python Requests Library", "Go-http-client", "python-httpx", etc. So you have no idea what they do, no idea whether they come from one author/source or many different ones, or whether they belong to a single private hobbyist (and so are probably ok) or a malicious scraper - but you can assume that they will probably not respect the robots.txt. I added them anyway - it does no harm (but indeed had no effect, as it turned out). We'll deal with them later...

Third - and this is a big loophole - an "evil" bot will probably not identify itself with a user agent like "evil-bot". The more cleverly made ones will, on the contrary, try to hide behind a common user agent, either that of a browser used by humans or that of a legitimate and well-accepted (or even wanted) bot like e.g. the Google indexing bot. Again, we'll talk later about how to deal with that blind spot. For the moment it is sufficient to know: The Known Bots add-on is very helpful, but due to its nature it is limited in some respects.
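The weekly robots.txt update described above could be scripted roughly like this. It is only a minimal sketch: the bot names in `unwanted` are placeholders for illustration, not the list actually used on this forum, and the output only helps against bots that honour robots.txt at all.

```python
def robots_block(user_agents):
    """Build robots.txt stanzas that ask the given user-agent tokens to stay away."""
    stanzas = []
    # De-duplicate and sort case-insensitively so the file stays readable.
    for token in sorted(set(user_agents), key=str.lower):
        stanzas.append(f"User-agent: {token}\nDisallow: /\n")
    return "\n".join(stanzas)


# Hypothetical example list; replace it with the bots you picked from Known Bots.
unwanted = ["ExampleSEOBot", "python-httpx", "okhttp"]
print(robots_block(unwanted))
```

The printed text can be pasted into (or appended to) the existing robots.txt.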

In fact, there is a new "standard" trying to establish itself: Analogous to robots.txt, you can create an "ai.txt" in which you simply tell AI bots that you do not want them. However: It is neither well known nor well established and I have my doubts that AI bots will follow it (and according to the logs it seems to be checked rarely, if at all). I created one anyway - it costs nothing, is quickly done and does no harm. Its content is reproduced further down in this post.

To get rid of spam registrations I bought and installed the "Registration Spaminator" and "Login Spaminator" add-ons by @Ozzy47 - and immediately all hell broke loose. I had not suffered from many successful spam registrations until then, but from time to time one came through, and the occasions when I had to deal with those caught by the mechanisms built into XF and awaiting manual action grew just enough to annoy me. With Ozzy's add-ons the manual work is gone completely and not a single spam registration has come through. However: Now I see the full amount of unsuccessful attempts that was hidden until now. At the moment, after about 3.5 months, the Registration Spaminator shows ~26,000 unsuccessful registration attempts by bots and the Login Spaminator ~12,000 unsuccessful bot login attempts.

There is absolutely no need to do anything (as the add-ons do their job reliably), but as the logs also provide the IP addresses those bots use and offer an easy way to look up the IPs, I was curious and dived into it a bit. A couple of things turned out:

• many of the attempts came repeatedly from the same IP addresses

• most of them belonged to hosting providers in Russia

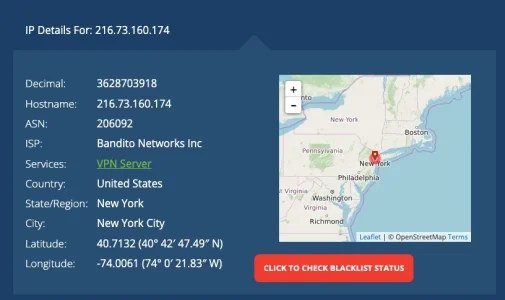

• apart from that there was a limited but noticeable number of VPN endpoints in various countries and occasionally some IPs from countries like China, India, Indonesia, Taiwan and - more rarely - from the US, UAE, Egypt and Ukraine

This is where I started my countermeasures. My forum users are almost exclusively from German-speaking countries, plus a few who come from other European countries (including the UK) and a handful from the US. So I installed the add-on Geoblock Registrations, again by @Sim, and excluded a small bunch of countries from registering, in case a spammer manages to get around Ozzy's add-ons. Just as a second line of defense - and due to the nature of my user base no collateral damage is to be expected.

On top of that I added some of the more notorious IPs to a freshly created deny list in my .htaccess. This is a bit of a dangerous game for two reasons:

• IPs often get reassigned relatively quickly, so one might block legitimate traffic after a short while. Plus, obviously, many spammers will just switch to another IP once they are locked out.

• the .htaccess is read and evaluated on every single request to the forum. If it is too big or too complex this may increase load times for legitimate users, which obviously is undesirable.

However, at the level of traffic on my forum it should not be an issue plus the size of my .htaccess is still fine.
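The post does not show the actual deny list, so the following is only a sketch of how such a block could be generated. It assumes Apache 2.4 with `mod_authz_core` and a host that allows these directives in .htaccess; the addresses are documentation-range placeholders, and `htaccess_deny` is a hypothetical helper name.

```python
import ipaddress


def htaccess_deny(entries):
    """Return an Apache 2.4 <RequireAll> block that denies the given IPs / CIDR blocks."""
    # strict=False lets "198.51.100.7/24" be normalised to 198.51.100.0/24.
    nets = {ipaddress.ip_network(e, strict=False) for e in entries}
    # Sort by IP version first so IPv4 and IPv6 networks are never compared directly.
    ordered = sorted(nets, key=lambda n: (n.version, n))
    lines = ["<RequireAll>", "    Require all granted"]
    for net in ordered:
        # Single hosts are written as a bare address, ranges in CIDR notation.
        target = str(net.network_address) if net.num_addresses == 1 else str(net)
        lines.append(f"    Require not ip {target}")
    lines.append("</RequireAll>")
    return "\n".join(lines)


# Placeholder addresses from the documentation ranges, not real offenders.
print(htaccess_deny(["192.0.2.10", "198.51.100.7/24"]))
```

Keeping the list de-duplicated and sorted makes it easier to prune entries later, which matters given how quickly IPs get reassigned.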

Coming back to the unwanted bots: I did the same with those bots from the Known Bots list that did not follow the robots.txt (btw. many claim on their website that they respect the robots.txt but in fact do not: various SEO companies notoriously, but also e.g. the ChatGPT training bot - or a bot using its user agent). A very special case is Meta's "facebook external hit" bot: It claims to respect the robots.txt most of the time, but not always. It claims to serve the purpose of creating previews for content from your forum that is shared on Facebook - but at the same time to be used for "security checks" and other purposes - so Meta does not even rule out that it is used for training Facebook's AI model. Finding this out takes careful reading - this thing indeed seems to be a Trojan horse. Other companies have different bots for different purposes.

To get the IPs those bots are using I had to grep them from the server's web.log, using the user agent as filter criterion (as the Known Bots add-on does not provide the IPs). Some of the serious businesses that use bots publish the IPs or IP ranges of their bots on their web pages. This comes in handy, as this way you can block them out completely, while via the web.log you only get the IPs one by one, which is a bit annoying. BTW: A lot of the companies that send bots to your forum do not like bots on their own websites - they use Cloudflare's "I am a human" check. Double standards, I'd say - and a clear hint that you do not want THEIR bots on YOUR website either.

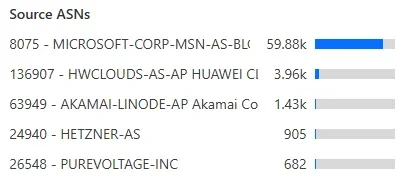

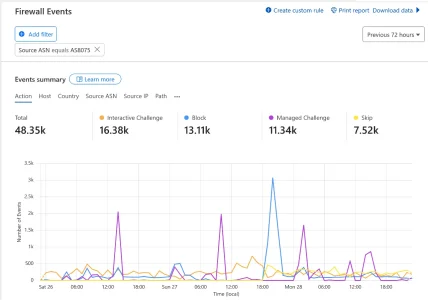
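The grep step can also be done with a few lines of Python. This is a sketch under the assumption that the web.log uses the common Apache "combined" log format; the post does not state the actual format, and the sample lines below are made up.

```python
import re
from collections import Counter

# Apache "combined" format: IP, ident, user, [time], "request", status, size, "referer", "user agent"
LOG_LINE = re.compile(r'^(\S+) \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} \S+ "[^"]*" "([^"]*)"')


def ips_for_agent(lines, needle):
    """Count requests per client IP for log lines whose user agent contains `needle`."""
    hits = Counter()
    for line in lines:
        m = LOG_LINE.match(line)
        if m and needle.lower() in m.group(2).lower():
            hits[m.group(1)] += 1
    return hits


# Made-up sample lines; in practice iterate over open("web.log").
sample = [
    '203.0.113.5 - - [10/Oct/2024:13:55:36 +0200] "GET /a HTTP/1.1" 200 100 "-" "python-httpx/0.27.0"',
    '198.51.100.9 - - [10/Oct/2024:13:55:38 +0200] "GET /c HTTP/1.1" 200 100 "-" "Mozilla/5.0"',
]
for ip, count in ips_for_agent(sample, "python-httpx").most_common():
    print(ip, count)
```

Sorting by request count puts the most persistent IPs at the top, which is a reasonable order for deciding what to look up in whois first.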

An interesting thing in that respect were the "generic library based bots" I mentioned earlier. It turned out that they were quite massive in their attempts to scrape forum content and that many of them were using IPs from the big cloud providers. A lot of attempts came from AWS, mainly China-based, but also from Microsoft, Digital Ocean and even Oracle. Plus, again, loads from Russian providers. And they were mostly using multiple IPs from the same network blocks of the ISP/hoster in question.

So again I took advantage of being a small forum, pushed the IPs in question into whois and in most cases simply blocked the whole network block the IP belonged to via .htaccess. Again a bit of a dangerous game, especially with providers like AWS, as many companies are using their services - so there is a danger of collateral damage, but so be it.
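Before asking whois, it can help to see which of the collected IPs sit close together. The sketch below groups IPv4 addresses by /24; this is only a heuristic chosen for illustration, since the real allocation reported by whois may be larger or smaller, and the addresses are placeholders.

```python
import ipaddress
from collections import defaultdict


def cluster_by_prefix(ips, prefix=24):
    """Group IPv4 addresses by network prefix and keep groups with more than one member."""
    groups = defaultdict(set)
    for ip in ips:
        addr = ipaddress.ip_address(ip)
        if addr.version != 4:
            continue  # this sketch handles IPv4 only
        net = ipaddress.ip_network(f"{addr}/{prefix}", strict=False)
        groups[str(net)].add(str(addr))
    return {
        net: sorted(members, key=ipaddress.ip_address)
        for net, members in groups.items()
        if len(members) > 1
    }


# Placeholder addresses from the documentation ranges.
seen = ["203.0.113.5", "203.0.113.10", "198.51.100.1"]
print(cluster_by_prefix(seen))
```

A block that shows up here with several members is a candidate for a whois lookup and, if the owner is a hoster you do not expect users from, for a range block.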

Coincidentally, I had innocently installed the "custom 404 page" add-on by @Siropu. The intention was - you may guess it - to create a custom 404 page, but it turned out to be another source of knowledge: It provides a log of calls that ended in a 404, and much to my surprise many of those were very odd URLs. A lot of them were obvious cracking attempts, calling pages or files under e.g. /wp-admin/, /wp-install/, /wp-content/, /wp-plugins/ or /wp-includes/ that don't exist on my server, as I do not run WordPress and never have on this domain. The same goes for some other URLs like "superadmin.php" and many more - all of them nonexistent (else they would not get a 404 and would not be listed in the add-on's log). Grepping the web.log for those patterns opened a new can of worms: an enormous number of calls from various IPs. Again, as before, many of them were located at the cloud providers and many in Russia. But this time even a net block of Kaspersky was part of the game - and also an IP that belongs to Cloudflare. Network blocks from Microsoft were pretty prominent as a source of the requests here, as were a couple of providers who claim to have their offices on the Seychelles - for decades already a pretty easy and obvious sign of a rogue hosting company.

As, for whatever reason, the log of the 404 add-on only rarely delivers an IP address (but sometimes does), a grep job is unavoidable here. It also turned out that many of those callers hid behind generic user agents and thus are not listed by "Known Bots".

In fact those calls do no real harm - what they are aiming at is not there, so nothing can happen. But they fill my logs with crap and put unwanted load on the server for no reason. And the load is real: they often send hundreds of requests per minute, and as this goes on for a while it adds up. So I went the same route as before and blocked either the IP or the whole network block, depending on the output of whois, the geographic location, the number of IPs involved and the frequency of occurrence. I did so for the two reasons above plus a third one: If nasty stuff repeatedly comes from a certain area of the net, chances are that more and different nasty stuff will come from that area in the future (and maybe already does, but I did not filter for it in the logs, so I don't know about it). Locking them out completely is the simplest way of dealing with it.

To simplify things I will probably create a rule in .htaccess that simply blocks any call to those WP-directories, so I don't have to fiddle around with single IPs.
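A pattern for such a rule can be tried out in Python before touching the .htaccess. This is a sketch only: the directory names are the ones listed earlier in the post, and `is_wp_probe` is a hypothetical helper for testing the pattern against paths from the 404 log.

```python
import re

# Matches requests to the WordPress directories named above, at the start of the path only.
WP_PROBE = re.compile(r"^/wp-(admin|install|content|plugins|includes)(/|$)", re.IGNORECASE)


def is_wp_probe(path):
    """Return True if the request path targets one of the (nonexistent) WP directories."""
    return WP_PROBE.match(path) is not None


for path in ["/wp-admin/setup-config.php", "/threads/wp-admin-question.123/"]:
    print(path, is_wp_probe(path))
```

Assuming mod_rewrite is available on the shared host, the same pattern could probably be carried over as something like `RewriteRule ^wp-(admin|install|content|plugins|includes)(/|$) - [F,L]` (without the leading slash, as is usual in .htaccess context); this is untested here. Anchoring the pattern at the start of the path avoids hitting legitimate thread URLs that merely contain "wp-admin" in their title.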

These measures collectively brought down unwanted traffic and behaviour massively - at least for the moment. This will need constant readjustment of the robots.txt and the .htaccess, but in general it seems to be a success. How demanding the adjustment will be remains to be seen. Basically I followed a bunch of patterns:

• user agent

• behaviour

• geographical location

• kind of IP address (dial-up, hoster, VPN, cloud provider, mobile)

In some cases all 4 patterns matched, in others fewer. And it turned out that many of the IPs caught by one of the Spaminator add-ons also scanned for WordPress weaknesses or grabbed content. BTW: While IPv6 addresses did turn up from time to time, the vast majority were IPv4.

I have no proof, but I have the impression that most of those requests probably came from just a handful of different persons (or rather criminal organizations), using changing IP addresses over time. The patterns during registration and the URLs they crawled automatically were too similar. And it seems that most of them ultimately have their source in Russia - a country that has been notorious for decades as a common and pretty safe home for all kinds of internet crime. There are other sources as well, but in comparison these are few.

There are still some loose ends. E.g. in the log of the custom 404 page add-on I see some strange behaviour: a series of calls to existing thread URLs, but with a random pattern appended that then gets rotated through various endings, like e.g.

Threadurl/sh1xw6es60qi

Threadurl/sh1xw6es60qi.php

Threadurl/sh1xw6es60qi.jsp

Threadurl/sh1xw6es60qi.html

I see this for various URLs (threads, tags, media) with various random parts. No idea what the intention behind this may be. Also I see calls for things in /.well-known/* like

$forum-URL/.well-known/old/

$forum-URL/.well-known/pki-validation/autoload_classmap.php

$forum-URL/.well-known/init

$forum-URL/.well-known/.well-known/owlmailer.php

$forum-URL/.well-known/pki-validation/file.php

$forum-URL/.well-known/pki-validation/sx.php

$forum-URL/.well-known/old/pki-validation/xmrlpc.php

and many, many more. While most of them seem very fishy, at least some of them seem genuine, as they come e.g. directly from Google and in that case seem to refer to something Android-related. Plus there is legitimate use for calls to .well-known, e.g. in case your forum acts as an SSO provider (which mine does not).

It was an interesting dive into a rabbit hole - it probably did not solve many relevant real-world problems but taught me a bit about what's going on unseen. One more lesson is that, compared to my time as a professional admin decades ago, things have become more miserable. Back then AI did not exist, nor did cloud providers, publicly usable VPNs or - to a relevant degree - bots, apart from the indexing bots of search engines. Evil players would (when stupid) use their own IP or - the more clever ones - misconfigured open proxies, hacked servers, rogue providers or private computers behind dial-ups that were turned into zombies via malware. One could approach abuse desks easily, and they were appropriately skilled and took action quickly and effectively. Today, things have become way more complex and everybody is using AWS and the like, the goodies as well as the baddies. Getting in touch with one of those hosters has become useless: staff is incompetent and/or uninterested and hides behind a wall of inappropriate contact forms. So today I do not even bother to try to contact an abuse desk anymore and simply lock out to the best of my abilities and possibilities.

Also it is a sad truth that the big cloud companies earn part of their revenue by serving the dirty part of the web that does harm to others. It may not be their intention, and - due to the sheer scale and the highly automated business model of the cloud providers - it is hard to avoid, but AWS, Microsoft, Oracle, Digital Ocean and obviously even Cloudflare are not the white knights they claim to be. They earn dirty money, they profit from illegal and fraudulent behaviour of their customers and clearly could do a lot more to prevent it. But this would negatively affect their profits...

What could be quite nice would be the possibility to include a continuously updated third-party blacklist of known rogue or spam IPs in the .htaccess (much in the way it is or was common for mail servers). However: I did not dive into this; it seems to be neither common nor straightforward and could possibly lead to performance issues. I would still be interested in trying it out.

Code (my ai.txt):

```
# $myforum content is made available for your personal, non-commercial
# use subject to our Terms of Service here:
# https://$myforum/help/terms/
# Use of any device, tool, or process designed to data mine or scrape the content
# using automated means is prohibited without prior written permission.
# Prohibited uses include but are not limited to:
# (1) text and data mining activities under Art. 4 of the EU Directive on Copyright in
# the Digital Single Market;
# (2) the development of any software, machine learning, artificial intelligence (AI),
# and/or large language models (LLMs);
# (3) creating or providing archived or cached data sets containing our content to others; and/or
# (4) any commercial purposes.
User-Agent: *
Disallow: /
Disallow: *
```

The add ons I used:

Ban or moderate users from specific countries when registering using Maxmind's GeoLite2 Database

- Sim

- geoblocking geoip geolite2 maxmind registration

- Add-ons [2.x]

Customize your 404 error page, keep track of missing URLs and redirect them to new pages.

- Siropu

- 404 error 404 not found 404 redirect

- Add-ons [2.x]
