[021] ChatGPT Autoresponder [Deleted]

- Thread starter 021

colcar

Active member

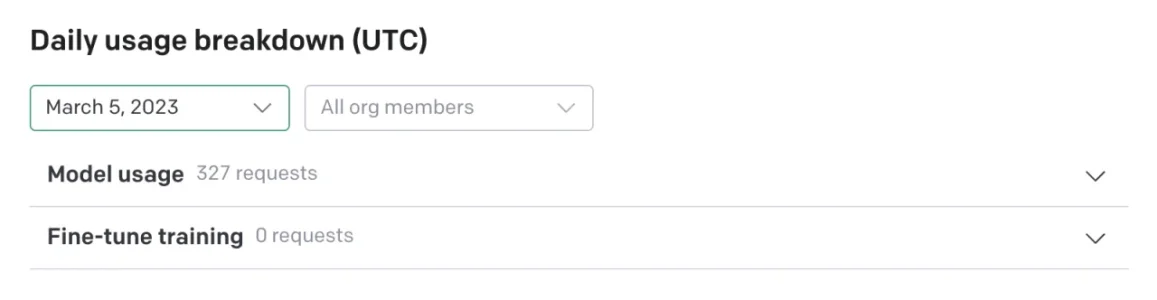

Thanks for the info. Is a request equal to asking one question of the bot?

Sample usage for March 5:

[Attachment 282557: sample usage screenshot]

The ChatGPT bot is only enabled on fewer than five forums out of more than 200 nodes.

16K+ users log in daily.

colcar

Active member

Yeah, I checked the demo and it looks like the API knowledge cutoff date is the same as the standard one. I was hoping it was up to date because I wanted to use it to generate quizzes for my football forum, but it thinks our club manager is the same guy who was sacked three managers ago.

You can sort of check: ask the different ChatGPT versions what the latest nginx version is, or about some other well-known software, and it usually lists the release date of that version. When the free ChatGPT GUI first launched, its cutoff was around October 2021, but since ChatGPT Plus launched, both free and paid are around September 2021, in the GUI at least. No idea about the API version.

Looks like it.

Thanks for the info. Is a request equal to asking one question of the bot?

colcar

Active member

80 cents for 300 questions. Could get expensive quickly unless you figured out a way to monetize the feature on your forum.

Looks like it.
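The "80 cents for 300 questions" figure above is easy to turn into a rough monthly projection. A minimal sketch, assuming every question costs about the same as the observed average (API billing is actually per token, so long threads and long answers cost more); the daily question counts are hypothetical and not taken from any forum in this thread.

```python
# Observed figures quoted in the thread.
OBSERVED_COST_USD = 0.80
OBSERVED_QUESTIONS = 300


def cost_per_question(total_cost_usd: float, questions: int) -> float:
    """Average cost of one bot reply, derived from an observed total."""
    return total_cost_usd / questions


def projected_monthly_cost(questions_per_day: int, days: int = 30) -> float:
    """Monthly bill at the observed average (assumes a flat cost per question)."""
    per_question = cost_per_question(OBSERVED_COST_USD, OBSERVED_QUESTIONS)
    return per_question * questions_per_day * days


if __name__ == "__main__":
    for per_day in (100, 1_000, 5_000):  # hypothetical usage levels
        print(f"{per_day:>5} questions/day -> ${projected_monthly_cost(per_day):,.2f}/month")
```

At that average, 1,000 questions a day comes to about $80 a month.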

ProCom

Well-known member

I don't know about this addon, but with his other addon you can set permissions per usergroup as a whole, or per combination of usergroup and node.

I see that permissions are granted per node; does this mean I do not have the option to grant permissions to certain usergroups?

Yup, totally understand. Even with the tiny usage of our moderators testing it out (the other addon), I can see how tons of users leveraging it every day could add up.

Yeah, the % of messages might not be as high, but it's all relative. Just being wary of bill shock.

Ya, I guess "expensive" is very relative compared to the value it provides for the users/community. If the other addon proves to be "valuable" to our community (users posting the content and receivers of the content) I could see it being a value-add to our paid-memberships as a new extra feature for them.

Could get expensive quickly unless you figured out a way to monetize the feature on your forum.

colcar

Active member

Yeah you're right, I can see the utility of this addon, that's why I'm hoping it can be restricted for use by certain usergroups - i.e. paying members.

I don't know about this addon, but his other addon, you can set per usergroup as a whole, or combinations of per usergroup per node.

Yup, totally understand. Even with the tiny usage of our moderators testing it out (the other addon), I can see how tons of users leveraging it every day could add up.

Ya, I guess "expensive" is very relative compared to the value it provides for the users/community. If the other addon proves to be "valuable" to our community (users posting the content and receivers of the content) I could see it being a value-add to our paid-memberships as a new extra feature for them.

Mr Lucky

Well-known member

As you can set the forum(s) where the bot replies, presumably you would only allow paying upgraded users into those forums.

Yeah you're right, I can see the utility of this addon, that's why I'm hoping it can be restricted for use by certain usergroups - i.e. paying members.

colcar

Active member

On my forum we currently have ads, and members who upgrade to paid membership have the ads removed, along with a few other perks such as post edit permissions etc.

As you can set the forum(s) where the bot replies, presumably you would only allow paying upgraded users into those forums.

I don't think I would want to have a separate forum just for use of this addon but if that's the only option then I suppose I would give it a try.

Would very much prefer the option to restrict access to the Chat bot using usergroups though, seems the standard way of doing everything else.
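The thread describes two ways to gate the bot: enabling it only in certain nodes (forums), and, as asked here, allowing only certain usergroups such as paying members. A minimal sketch of how those two checks could combine, with an optional per-node usergroup list; the class, field names and group names are all hypothetical and do not reflect how the add-on or XenForo actually stores permissions.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class BotAccessRules:
    """Hypothetical rules: which nodes the bot runs in, and which usergroups may use it."""
    enabled_nodes: Set[int] = field(default_factory=set)
    # Usergroups allowed to trigger the bot in any enabled node.
    allowed_groups: Set[str] = field(default_factory=set)
    # Optional per-node list that replaces allowed_groups for that node.
    node_overrides: Dict[int, Set[str]] = field(default_factory=dict)

    def can_use_bot(self, member_groups: Set[str], node_id: int) -> bool:
        if node_id not in self.enabled_nodes:
            return False  # bot is not active in this forum at all
        groups = self.node_overrides.get(node_id, self.allowed_groups)
        # The member needs at least one usergroup in common with the allowed set.
        return bool(member_groups & groups)


if __name__ == "__main__":
    rules = BotAccessRules(
        enabled_nodes={5, 7},
        allowed_groups={"paid"},
        node_overrides={7: {"paid", "registered"}},  # node 7: open to all registered members
    )
    print(rules.can_use_bot({"registered"}, 5))          # False
    print(rules.can_use_bot({"registered", "paid"}, 5))  # True
    print(rules.can_use_bot({"registered"}, 7))          # True
```

Keeping the node check first matches the dedicated-forum approach suggested below, while the usergroup set covers the paid-members case without needing a separate forum.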

Mr Lucky

Well-known member

I don't think I would want to have a separate forum just for use of this addon but if that's the only option then I suppose I would give it a try.

I thought that was what you implied by this:

that's why I'm hoping it can be restricted for use by certain usergroups

If you had such a forum where your paid members can post threads but others can only view, then you may get more upgrades if people see the value of the bot (be it useful info or just fun).

I have a dedicated forum. I think it would annoy members to have the bot answering everywhere.

Starbucks

Well-known member

For those who are afraid of ending up with a crazy-high bill, you can easily set a usage limit in the OpenAI account settings:

platform.openai.com

021

Well-known member

Oh my god gentlemen, I'm amazed how active this thread was tonight

Unfortunately, I can't read all of your posts as I'm working on an update.

I see a lot of people wanting to get the bot to respond to mentions and the ability to turn off AI in topics. Both of these features are accepted and will appear in the add-on in the future. To avoid further duplicate feature requests and structure all requests, I created a special forum section. So now if you want to make a suggestion regarding my add-ons, I will ask you to do so exclusively in this section.

If you contacted me with an individual question here, please do so again at devsell.io

Love <3

Starbucks

Well-known member

You’ve done an amazing job.

Oh my god gentlemen, I'm amazed how active this thread was tonight

Unfortunately, I can't read all of your posts as I'm working on an update.

I see a lot of people wanting to get the bot to respond to mentions and the ability to turn off AI in topics. Both of these features are accepted and will appear in the add-on in the future. To avoid further duplicate feature requests and structure all requests, I created a special forum section. So now if you want to make a suggestion regarding my add-ons, I will ask you to do so exclusively in this section.

If you contacted me with an individual question here, please do so again at devsell.io

Love <3

Actually, yesterday I was about to ask a developer to build a similar plugin, but then I came across yours. It was exactly what I was looking for.

I’ve bought yours, and it’s great! Thank you!

colcar

Active member

Thanks, I'll probably end up doing that if there's no way to allow access via usergroup permissions.

I thought that was what you implied by this:

If you had such a forum where your paid members can post threads but others can only view, then you may get more upgrades if people see the value of the bot (be it useful info or just fun).

I have a dedicated forum. I think it would annoy members to have the bot answering everywhere.

ProCom

Well-known member

LOL, you clearly struck while the AI iron is red-hot right now!

Oh my god gentlemen, I'm amazed how active this thread was tonight

BRILLIANT! Can't wait to test it!

I see a lot of people wanting to get the bot to respond to mentions

ProCom

Well-known member

Very cool / smart idea!

I created a special forum section. So now if you want to make a suggestion regarding my add-ons, I will ask you to do so exclusively in this section.

If you contacted me with an individual question here, please do so again at devsell.io

Where would you like us to post ideas that aren't yet fleshed-out into "suggestions"? For example:

I'm wondering if there's a reason to limit the quantity or velocity of usage per member? OpenAI has this already built into ChatGPT (I've received the "too many in one hour" response before on the free version)... and I'm curious if something like that makes sense here?

At least for me, I'm not planning to roll out any of these tools to people that I don't trust not to abuse it... but I can see how some forums that have it wide open could run into problems with members just having a field day going crazy with it.
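If a forum does decide to cap per-member usage, a sliding-window limiter is one common way to express "N requests per hour", similar in spirit to the ChatGPT message mentioned above. A minimal sketch; the class name, the 3-per-hour figures and the injectable clock are assumptions for illustration, not a feature of the add-on.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict


class PerMemberRateLimiter:
    """Sliding-window limit: at most max_requests bot replies per member per window_seconds."""

    def __init__(self, max_requests: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the behaviour can be tested without waiting
        self._history: Dict[int, Deque[float]] = defaultdict(deque)

    def allow(self, member_id: int) -> bool:
        """Record and allow a request, or return False if the member is over the limit."""
        now = self.clock()
        history = self._history[member_id]
        # Forget requests that have slid out of the window.
        while history and now - history[0] >= self.window_seconds:
            history.popleft()
        if len(history) >= self.max_requests:
            return False  # e.g. reply with "too many requests in one hour"
        history.append(now)
        return True


if __name__ == "__main__":
    fake_now = [0.0]
    limiter = PerMemberRateLimiter(max_requests=3, window_seconds=3600,
                                   clock=lambda: fake_now[0])
    print([limiter.allow(42) for _ in range(4)])  # [True, True, True, False]
    fake_now[0] = 3600.0  # one hour later the window has emptied
    print(limiter.allow(42))                      # True
```

A sliding window avoids the burst a fixed hourly counter allows at the hour boundary, at the cost of storing one timestamp per recent request.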

021

Well-known member

You can ask questions about the add-on here, in the thread on my forum or in private messages.

Where would you like us to post ideas that aren't yet fleshed-out into "suggestions"? For example:

This decision should be made solely by you, depending on the specific circumstances. This question is not directly related to the add-on, it is more about how your forum should be organized. Everything is simple here: if you have reasons to impose restrictions, you should introduce restrictions, if not, you should not.

I'm wondering if there's a reason to limit the quantity or velocity of usage per member? OpenAI has this already built into ChatGPT (I've received the "too many in one hour" response before on the free version)... and I'm curious if something like that makes sense here?

021

Well-known member

021 updated [021] ChatGPT Autoresponder with a new update entry:

1.2.0

This version requires the installed add-on [021] ChatGPT Framework 1.2.0+

Added the "Max responses per thread" option, which will allow you to limit the number of bot responses in one topic.

Values for the "Temperature" option are limited to the range 0 to 1, in steps of 0.1.

The bot's context is limited to the last 10 posts before the post it is replying to. This is done to avoid the token limit in long threads.

Now the error logs will contain a response from OpenAI, which will allow...

Read the rest of this update entry...
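The 1.2.0 entry above lists three concrete rules: a cap on bot replies per thread, a 0 to 1 temperature range in 0.1 steps, and a context of the last 10 posts before the post being answered. A minimal sketch of what each rule means; the function names are hypothetical, posts are modeled as plain strings in thread order, and this is not the add-on's actual code.

```python
from typing import List

MAX_CONTEXT_POSTS = 10  # from the 1.2.0 notes


def can_reply_in_thread(bot_replies_so_far: int, max_responses_per_thread: int) -> bool:
    """'Max responses per thread': allow a reply only while the bot is under the cap."""
    return bot_replies_so_far < max_responses_per_thread


def is_valid_temperature(value: float) -> bool:
    """'Temperature' must lie in [0, 1] and be a multiple of 0.1."""
    if not 0.0 <= value <= 1.0:
        return False
    # Compare in tenths with a tolerance, because 0.1 has no exact binary representation.
    tenths = value * 10
    return abs(tenths - round(tenths)) < 1e-9


def build_context(thread_posts: List[str], reply_to_index: int) -> List[str]:
    """Posts the bot sees: at most the last MAX_CONTEXT_POSTS before the one it answers."""
    start = max(0, reply_to_index - MAX_CONTEXT_POSTS)
    return thread_posts[start:reply_to_index]
```

For a 25-post thread, answering the post at index 20 passes posts 10 to 19 as context, while answering the post at index 3 passes only posts 0 to 2. Capping the window this way keeps the prompt size roughly constant however long the thread grows, which is the token-limit problem the update mentions.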

ProCom

Well-known member

I posted this in the requests at the site here, but also wanted to post the idea in this thread for anybody not on that site to get their thoughts and feedback:

One of the most used/suggested tools with LLMs is to do "Role Prompting" and other pre-training.

Would it be possible to have an "advanced" settings area in this (and your other AI addons) where we could do some pretraining, like:

"You are a forum-assistant robot name: RickyRobot. Your job is to reply with factual information, but always in a very friendly and helpful way. You know about every topic, but you are especially an expert in fixing cars. If given the opportunity, you might create a joke about being a mechanic."

This kind of thing would align GREAT when associating the bot to a specific "member" and having the member's name (and personality) match with the bot's replies and personality.
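What is called role prompting here usually maps onto the `system` message in OpenAI's Chat Completions API: the persona goes first, ahead of the thread context and the question. A minimal sketch that only builds the request body and does not call the API; the `Post` type, the "author: text" prefix convention, and the default model and temperature are assumptions, and nothing here reflects how the add-on actually builds its prompts.

```python
import json
from dataclasses import dataclass
from typing import Any, Dict, List

# Persona text from the suggestion above (the bot name is just an example).
SYSTEM_PROMPT = (
    "You are a forum-assistant robot name: RickyRobot. Your job is to reply with factual "
    "information, but always in a very friendly and helpful way. You know about every topic, "
    "but you are especially an expert in fixing cars. If given the opportunity, you might "
    "create a joke about being a mechanic."
)


@dataclass
class Post:
    """Hypothetical minimal view of a forum post."""
    author: str
    text: str
    is_bot: bool = False


def build_chat_request(system_prompt: str, context: List[Post], question: Post,
                       model: str = "gpt-3.5-turbo", temperature: float = 0.7) -> Dict[str, Any]:
    """Build a Chat Completions request body with the persona as the system message."""
    messages = [{"role": "system", "content": system_prompt}]
    for post in context:
        if post.is_bot:
            # The bot's own earlier replies go back in as assistant turns.
            messages.append({"role": "assistant", "content": post.text})
        else:
            # Prefix member posts with the author so the model can tell speakers apart.
            messages.append({"role": "user", "content": f"{post.author}: {post.text}"})
    messages.append({"role": "user", "content": f"{question.author}: {question.text}"})
    return {"model": model, "messages": messages, "temperature": temperature}


if __name__ == "__main__":
    thread = [Post("Alice", "My car squeaks when I brake."),
              Post("RickyRobot", "Squeaky brakes are often a sign of worn pads.", is_bot=True)]
    body = build_chat_request(SYSTEM_PROMPT, thread, Post("Alice", "How do I check the pads?"))
    # This body would be POSTed to https://api.openai.com/v1/chat/completions.
    print(json.dumps(body, indent=2))
```

Sending earlier bot replies back as `assistant` turns lets the persona stay consistent across a thread, which fits the idea of tying the bot to a specific member account.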
